I recently read an article in ComputerWeekly describing how TFL had redesigned their website using HTML5 to optimise performance across multiple device types. I was interested to see how the new site was handling the likely increase in traffic due to the tube strike.

Prior to the HTML5 rewrite, the last major redevelopment of this site had been in 2007, well before the proliferation of the mobile devices that are now used daily to check for travel updates or plan journeys. 75% of Londoners visit the TFL website regularly, and it receives 8 million unique visitors per month.

I was in London earlier this week and, like millions of other commuters, wanted to keep up to date with news of the tube strike, so I turned to my smartphone for answers. The site performed well on my Android phone, but I wondered whether the increase in traffic had caused any performance degradation.

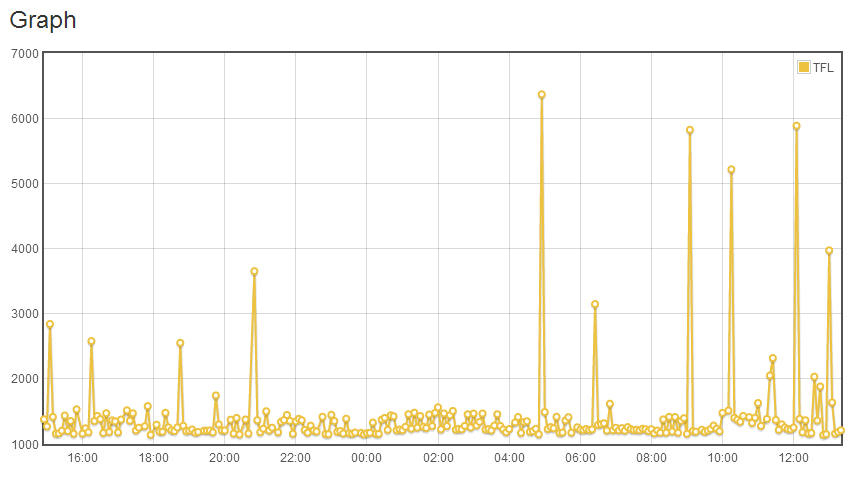

Although occasional spikes in response times were observed (which is common when monitoring in this way), on the whole the site remained responsive throughout the day. Average response times appear no slower today than they were last week (the chart shows response times in milliseconds).
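For readers who want to run a similar comparison on their own measurements, here is a minimal sketch in Python. It is not how our monitoring tool works internally; the function names, the 10% tolerance and the sample values are all made up for illustration. It summarises two sets of response times in milliseconds and checks whether the current average is noticeably slower than the baseline.

```python
from statistics import mean, median


def summarise(samples_ms):
    """Return the mean, median and maximum of response times in milliseconds."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    return {
        "mean": mean(samples_ms),
        "median": median(samples_ms),
        "max": max(samples_ms),
    }


def is_slower(baseline_ms, current_ms, tolerance=0.10):
    """True if the current mean exceeds the baseline mean by more than
    `tolerance` (a fraction; 0.10 is an arbitrary illustrative choice)."""
    return mean(current_ms) > mean(baseline_ms) * (1 + tolerance)


# Hypothetical sample values, not real measurements of any site.
last_week = [420, 390, 450, 410, 430]
today = [400, 440, 560, 410, 395]  # includes one occasional spike

print(summarise(last_week))
print(summarise(today))
print("Slower than last week:", is_slower(last_week, today))
```

Looking at the maximum alongside the mean makes it easier to tell an occasional spike apart from a general slowdown.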

If only more of the sites that I visit regularly performed as well as this.

Get in touch for more information about our “Test The Market” monitoring application, which can give you insights into your own website's performance and show how it compares with that of your competitors.

See more articles like this, and download the response time report at:

http://blog.trustiv.co.uk/2014/02/tfls-new-website-coping-well