I’ve been working for some time on a project which is deploying a complex application to a client’s servers. This project relies on Powershell scripts to push zip files to servers, unzip those files on the servers and then install the MSI files contained within them. The zip files are frequently large (up to 900MB) and the time taken to unzip the files is causing problems with our automated installation software (Tivoli) due to timeouts.

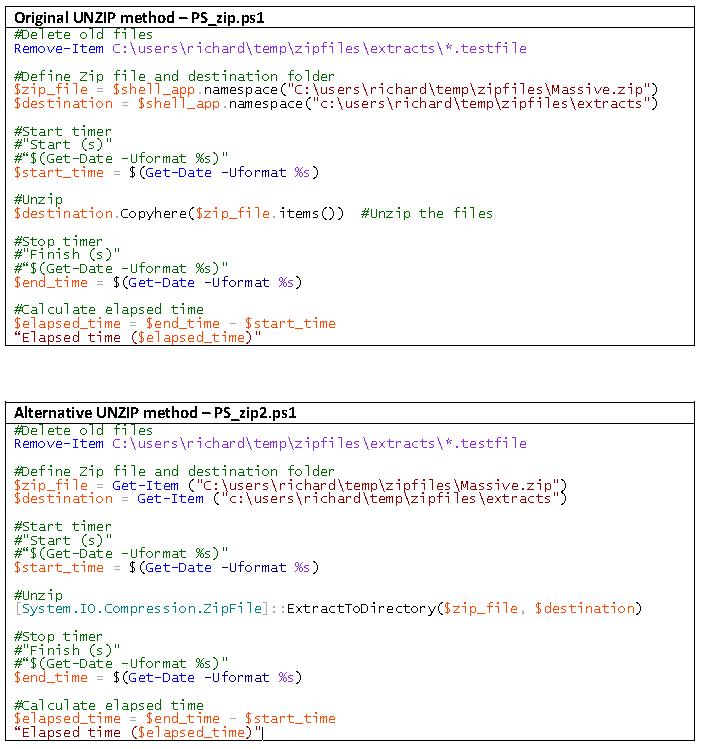

The scripts are currently unzipped using the Copyhere method.

Simple tests on a Windows 8 PC with 8GB RAM and an 8 core processor although a single SATA hard drive show that this method is “disk intensive” and disk utilisation as viewed in Task Manager “flatlines” at 100% during the extraction.

I spent some time looking at alternatives to the “Copyhere” method to unzip files to reduce the time taken for deployments and reduce the risk of Tivoli timeouts which were affecting the project.

Method

A series of test files were produced using a test utility (FSTFIL.EXE), FSTFIL creates test files made up of random data. These files are difficult to compress due to the fact that they contain little or no “whitespace” or repeating characters, similar to the already compressed MSI files which make up our deployment packages.

Files were created that were 100MB, 200MB, 300MB, 400MB and 500MB. Each of these files were zipped into similar sized ZIP files. As well as this a single large ZIP files containing each of the test files was also created.

Tests were performed to establish the time taken to decompress increasingly large ZIP files.

Test were performed to establish whether alternative decompression (unzip) techniques were faster.

Observations

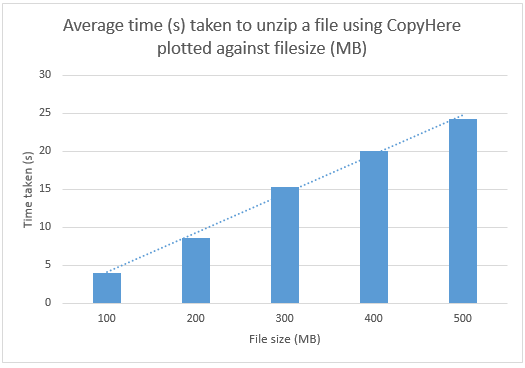

The effect of filesize on CopyHere unzips

Despite initial observations, after averaging out the time taken to decompress different sized files using the CopyHere method the time taken to decompress increasingly larger files was found to be linear.

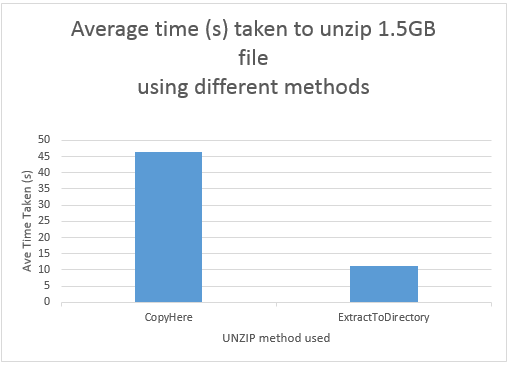

The difference between CopyHere and ExtractToDirectory unzips

To do this comparison, two PowerShell scripts were written. Each script unzipped the same file (a 1.5GB ZIP file containing each of the 100MB, 200MB, 300MB, 400MB and 500MB test files described earlier). Each script calculated the elapsed time for each extract, this was recorded for analysis.

Unzips took place alternately using one of the two techniques to ensure that resource utilisation on the test PC was comparable for each test.

No detailed performance monitoring was carried out during the first tests, but both CPU and disk utilisation was observed to be higher (seen in Task Manager) when using the CopyHere method.

Conclusion

The ExtractToDirectory method introduced in .Net Framework 4.5 is considerably more efficient when UNZIPPING packages. Assuming that this method is not available, alternative techniques to unzip the packages, possibly including the use of “self extracting .exe” files, the use of RAM disks or memory-mapped files to remove disk bottlenecks or more modern decompression techniques may reduce the risk of Tivoli timeouts and increase the likelihood of successful deployments.

Powershell scripts used

Hi!

Why aren’t you using the cmdlet “Measure-Command” instead of starting a timer to measure the time elapsed for the command to complete?

Hi Alexander,

I hadn’t heard of the “Measure-Command” option until now. I’m not an expert in Powershell, but I’m muddling through.

I’ll use that from now on when I need to measure elapsed time.

Thanks for the advice.

All the best,

Richard